Recipe for software testing

The role of software in industrial environments is constantly growing: there is more of it, and programs are becoming more complex and more dependent on each other. Software is often responsible for significant or even dangerous machinery. However, my diploma work suggests that software testing is not always considered important. It is often a stage that is not taken seriously and is therefore the first to be neglected when resources are scarce and schedules are tight. Yet the need for testing does not go away, and neglecting it can backfire if problems surface close to release. I studied how functional testing can be made an integral part of a mature industrial software project, and how the key functions can be identified and tested efficiently. Because of the large scope of the subject, non-functional testing (performance, data security, etc.) was excluded.

The result is a testing recipe that requires three ingredients: requirements, planning and implementation.

Requirements form the foundation

Testing, especially automated testing, requires a lot of resources. The amount is not always easy to justify, since the benefits may not be obvious to everyone, especially to people who do not deal with software on a daily basis. The key requirement is that the entire organization, and the management in particular, comes to understand the importance of testing. The most effective way to justify it is with hard figures that highlight the problems. These can be, for example, the time spent on fixing bugs, estimates of the costs incurred by errors, or quality problems. The figures can be supported with estimates or models of how better testing could improve the situation and how quickly savings could be achieved. The second part of the requirements calls for project analysis, since testing cannot succeed without the necessary resources and expertise in the organization.
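
As an illustration of turning hard figures into an argument, the sketch below combines two of the metrics mentioned above, the time spent on fixing bugs and the estimated cost of errors that reach customers, into a yearly figure, and then estimates the saving if better testing removed a given share of that cost. All input values are hypothetical, as is the simplifying assumption that savings scale linearly with the share of errors prevented; real figures would come from the organization's own records.

```python
from dataclasses import dataclass


@dataclass
class ErrorFigures:
    fix_hours_per_month: float     # developer hours spent fixing bugs each month
    hourly_cost: float             # cost of one developer hour
    field_errors_per_year: int     # errors that reached customers
    cost_per_field_error: float    # estimated cost of one such error (downtime, site visits...)


def annual_error_cost(f: ErrorFigures) -> float:
    """Yearly cost of errors: time spent fixing them plus errors found in the field."""
    fixing = f.fix_hours_per_month * 12 * f.hourly_cost
    field = f.field_errors_per_year * f.cost_per_field_error
    return fixing + field


def estimated_annual_saving(f: ErrorFigures, reduction: float) -> float:
    """Saving if better testing removes the share `reduction` (0..1) of the error cost."""
    if not 0.0 <= reduction <= 1.0:
        raise ValueError("reduction must be between 0 and 1")
    return annual_error_cost(f) * reduction


# Hypothetical example figures
figures = ErrorFigures(fix_hours_per_month=80, hourly_cost=70.0,
                       field_errors_per_year=4, cost_per_field_error=5000.0)
print(annual_error_cost(figures))               # 87200.0
print(estimated_annual_saving(figures, 0.25))   # 21800.0
```

Presenting both the current cost and a conservative reduction estimate makes it easier to discuss the assumptions openly with management instead of arguing about the conclusion.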

On the other hand, it is important to identify the life cycle of each project before deciding how testing should be conducted. Automated testing has undeniable benefits: case studies put its ROI anywhere from tens to even thousands of percent. In addition to savings in time and money, the studies found that customers were also more satisfied. However, it is inadvisable to plan comprehensive test automation for projects that are either short or near the end of their life cycles, because an investment in automation always takes longer to pay back than manual testing.
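
The payback argument can be made concrete with a simple break-even calculation. The sketch assumes, hypothetically, that automation costs a fixed number of hours up front and then a smaller number of hours per test round (maintaining the tests and reviewing results), while manual testing costs the same every round. Comparing the break-even point with the number of test rounds the project still has ahead of it shows why automation rarely pays off late in the life cycle.

```python
import math


def break_even_rounds(upfront_hours: float, manual_per_round: float,
                      automated_per_round: float) -> float:
    """Test rounds needed before automation becomes cheaper than manual testing.

    Returns math.inf if automation saves nothing per round and so never pays back.
    """
    saving_per_round = manual_per_round - automated_per_round
    if saving_per_round <= 0:
        return math.inf
    return upfront_hours / saving_per_round


def roi_percent(remaining_rounds: int, upfront_hours: float,
                manual_per_round: float, automated_per_round: float) -> float:
    """Return on the automation investment over the remaining life cycle, in percent."""
    total_saving = remaining_rounds * (manual_per_round - automated_per_round)
    return 100.0 * (total_saving - upfront_hours) / upfront_hours


# Hypothetical figures: 400 h to build the automation,
# 40 h per manual test round, 8 h per automated test round.
print(break_even_rounds(400, 40, 8))  # 12.5
print(roi_percent(24, 400, 40, 8))    # 92.0  (long-lived project, 24 rounds left)
print(roi_percent(6, 400, 40, 8))     # -52.0 (project near end of life, 6 rounds left)
```

With the same per-round saving, the long-lived project earns its investment back, while the project near the end of its life cycle would lose about half of it.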

Planning gets things moving

For the testing plan, it is important to first analyze the project at a general level, so that you know what you are dealing with, what is important and what may cause problems during implementation.

- Maturity. The best time to introduce automated testing is when the project is advanced enough, meaning that the software already has features that are unlikely to undergo major changes in the future. Automating tests for features that are still subject to change only produces tests that soon become obsolete, which is inefficient.

- Factors critical to safety. It is important to identify whether a piece of software is directly or indirectly connected to equipment that may cause critical safety issues. Testing such features must always take top priority, and industrial environments contain many of them.

- External factors. Dependence on other software and the need for customization make testing inherently more difficult. The features that are customized or dependent on other software must be identified before their testing can be planned. Automated testing of such features is not necessarily impossible, but if the aim is to apply automated testing within the project as efficiently and comprehensively as possible, automation should be started elsewhere.
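
The three criteria above can be read as a simple triage rule. The sketch below is one possible interpretation rather than a prescribed procedure, and the `Feature` fields and the three approach labels are hypothetical. Safety-critical features are always listed first, immature features are left to manual testing for now, and customized or externally dependent features are deferred rather than used as the starting point for automation.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    mature: bool           # unlikely to change significantly in the future
    safety_critical: bool  # directly or indirectly connected to hazardous equipment
    external: bool         # customized or dependent on other software


def approach(f: Feature) -> str:
    """Suggested testing approach for one feature."""
    if not f.mature:
        return "manual for now"   # automated tests for a moving target soon go stale
    if f.external:
        return "automate later"   # possible, but not where automation should start
    return "automate now"


def triage(features: list[Feature]) -> list[tuple[str, str]]:
    """Safety-critical features first; sorted() is stable, so input order is otherwise kept."""
    ordered = sorted(features, key=lambda f: not f.safety_critical)
    return [(f.name, approach(f)) for f in ordered]


plan = triage([
    Feature("new dashboard", mature=False, safety_critical=False, external=False),
    Feature("report export", mature=True, safety_critical=False, external=True),
    Feature("alarm handling", mature=True, safety_critical=True, external=False),
    Feature("interlock settings", mature=False, safety_critical=True, external=False),
])
for name, how in plan:
    print(f"{name}: {how}")
```

Note that a safety-critical feature still heads the list even when it is not yet mature enough to automate; it is simply tested manually until it stabilizes.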

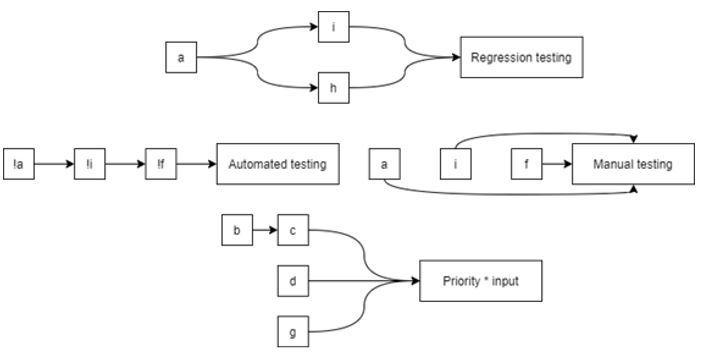

As part of my diploma work, I created a model for assessing the need for testing, and its priority, at the level of individual features. The purpose of the model was to produce a testing plan that is as comprehensive as possible and to get testing off to an efficient start. The model evaluates each software feature against nine factors: sufficient maturity (a), functional criticality (b), safety criticality (c), dependence on other features (d), dependence on other software (e), customization (f), error history (g), release frequency (h) and development pace (i).
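
One possible way to combine the nine factors into a single priority is a weighted score. The sketch below assumes that each factor is rated from 0 to 3 according to how much it raises the testing priority of a feature (for example, a feature with many past errors rates high on (g)), and that safety criticality, functional criticality and error history weigh more than the other factors. The scale, the weights and the example ratings are all hypothetical and would need to be agreed within the project.

```python
FACTORS = {
    "a": "sufficient maturity",
    "b": "functional criticality",
    "c": "safety criticality",
    "d": "dependence on other features",
    "e": "dependence on other software",
    "f": "customization",
    "g": "error history",
    "h": "release frequency",
    "i": "development pace",
}
MAX_SCORE = 3  # each factor rated 0..3 (hypothetical scale)

# Hypothetical weights: safety criticality counts triple,
# functional criticality and error history double, every other factor once.
WEIGHTS = {key: 1 for key in FACTORS} | {"b": 2, "c": 3, "g": 2}


def priority(scores: dict[str, int]) -> float:
    """Weighted testing priority of one feature, normalized to 0..1."""
    if scores.keys() != FACTORS.keys():
        raise ValueError("exactly one score is needed for each factor a-i")
    if any(not 0 <= s <= MAX_SCORE for s in scores.values()):
        raise ValueError(f"scores must be between 0 and {MAX_SCORE}")
    total = sum(WEIGHTS[key] * scores[key] for key in FACTORS)
    return total / (MAX_SCORE * sum(WEIGHTS.values()))


def rank(features: dict[str, dict[str, int]]) -> list[tuple[str, float]]:
    """Features sorted from highest to lowest testing priority."""
    scored = [(name, priority(scores)) for name, scores in features.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)


features = {
    "alarm handling": dict(a=3, b=3, c=3, d=1, e=0, f=0, g=2, h=1, i=1),
    "report export": dict(a=2, b=1, c=0, d=1, e=3, f=2, g=1, h=1, i=0),
}
for name, score in rank(features):
    print(f"{name}: {score:.2f}")
```

Normalizing to 0..1 keeps the scores comparable even if the weights are later adjusted, which makes it easier to revisit the plan as the project evolves.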

Especially when testing industrial software, it is vital to obtain the users’ viewpoint and expertise at the planning stage already. Industrial software is often used by specialists in their fields, who have indispensable information about error history (g), safety-critical interfaces (c), etc.

Before implementation, it must be decided exactly how the testing will be carried out. Selecting a testing tool involves many factors: first and foremost, the tool must cover the testing needs, but it should also be a long-term, well-supported choice that can still be used in the future, and easy enough for the developers and testers to learn given their existing expertise.
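
These selection criteria can be expressed as a filter followed by a ranking. In the sketch below, coverage of the testing needs is treated as a hard requirement, and the remaining candidates are ordered by support first and ease of learning second. The tool names, capability labels and 0 to 5 ratings are hypothetical placeholders, not an evaluation of any real tools.

```python
from dataclasses import dataclass


@dataclass
class Tool:
    name: str
    capabilities: set[str]  # kinds of testing the tool can handle
    support: int            # 0..5: vendor/community support and expected lifetime
    learnability: int       # 0..5: how easily the team can learn it with existing skills


def shortlist(tools: list[Tool], required: set[str]) -> list[str]:
    """Names of tools that cover every required need, best-supported first."""
    covering = [t for t in tools if required <= t.capabilities]  # subset test
    covering.sort(key=lambda t: (t.support, t.learnability), reverse=True)
    return [t.name for t in covering]


tools = [
    Tool("Tool A", {"gui", "api", "ci"}, support=4, learnability=2),
    Tool("Tool B", {"gui", "api", "ci", "load"}, support=3, learnability=5),
    Tool("Tool C", {"gui", "api"}, support=5, learnability=5),
]
print(shortlist(tools, {"gui", "api", "ci"}))  # ['Tool A', 'Tool B']
```

Tool C is rejected despite the best ratings because it does not cover every required need. If ease of learning matters as much as support in a given team, the sort key could be replaced with a weighted sum of the two ratings.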

Implementation never ends

When developing tests, three basic principles keep testing manageable: standards, reporting of results and reusability. Standards in this context refer primarily to practices within the project or organization, such as naming conventions and instructions, which must be uniform so that tests can be maintained and new people can join the project as seamlessly as possible. It is easier to justify resources for testing when the outcome is something tangible, so already when writing the tests you should consider how to make the reports easy to understand without much extra effort. A good test also explains to the uninitiated why it exists and why it passed or failed. Reusable components are the bread and butter of software production, and the same principle should be applied when developing tests.
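
The three principles can be illustrated with Python's standard `unittest` module. In the sketch below, `clamp_setpoint` and its 1500 rpm limit are hypothetical stand-ins for real project code. Descriptive test names that state the situation and the expected result act as the naming standard, a shared assertion mixin provides a reusable check, and the failure message explains in plain language why the check exists, so a failed run tells a non-specialist what went wrong.

```python
import unittest

MAX_RPM = 1500.0  # hypothetical rated maximum speed of the motor


def clamp_setpoint(rpm: float) -> float:
    """Hypothetical function under test: limits a speed setpoint to 0..MAX_RPM."""
    return max(0.0, min(float(rpm), MAX_RPM))


class SetpointChecks:
    """Reusable assertion shared by every test case that deals with setpoints."""

    def assert_safe_setpoint(self, requested: float, actual: float) -> None:
        self.assertTrue(
            0.0 <= actual <= MAX_RPM,
            f"Requested {requested} rpm produced {actual} rpm. Setpoints must stay "
            f"within 0..{MAX_RPM} rpm so the motor is never driven past its rating.",
        )


class TestSpeedSetpointLimits(SetpointChecks, unittest.TestCase):
    # Naming convention: situation first, expected result second.

    def test_request_above_maximum_is_limited_to_maximum(self):
        actual = clamp_setpoint(2000)
        self.assert_safe_setpoint(2000, actual)
        self.assertEqual(actual, MAX_RPM)

    def test_negative_request_is_limited_to_zero(self):
        actual = clamp_setpoint(-50)
        self.assert_safe_setpoint(-50, actual)
        self.assertEqual(actual, 0.0)

    def test_request_within_range_is_passed_through(self):
        self.assertEqual(clamp_setpoint(900), 900.0)


def run_report(stream=None) -> unittest.TestResult:
    """Run the tests; verbosity=2 lists every test by name together with its outcome."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(TestSpeedSetpointLimits)
    return unittest.TextTestRunner(stream=stream, verbosity=2).run(suite)


if __name__ == "__main__":
    run_report()
```

Because the test names read as sentences, the verbose report doubles as a readable list of the behaviour that was verified, with no separate reporting step needed.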

Test development should be thought of as an ongoing process. Maintenance will not require much effort if the foundation is sound, but as tools and practices evolve, it is advisable to reassess the testing plans and tools from time to time.

The recipe for testing in a nutshell

The recipe can be expressed in three parts.

- Requirements:

  - Management support. Specific measures are not always needed to obtain support, but the case for testing can be modelled using various metrics (cost of errors, time saved, customer satisfaction, etc.).

  - Life cycle and resources. Automation is almost always justified, but owing to its high upfront cost, it must be weighed carefully against the project’s life cycle and available resources.

- Planning:

  - Project review. The idea is to go through, among other things, matters that are critical to testing and issues that may complicate automated testing. Once the model has been created, priorities are assigned to the project’s features based on, for example, their safety criticality and error history.

  - Tools. The testing tools must primarily cover the testing needs, but preferably also be easy to learn and well supported.

- Implementation:

  - Basic principles. Standards and documentation, reporting of results and reusability form the basis for testing that is easy to maintain and produces meaningful results.

Riku Pääkkönen

Software developer